Sixty-five million years ago, an asteroid hit the earth in what is now the Gulf of Mexico and the resulting environmental changes killed off the dinosaurs, opening the way for the subsequent evolution of mammals.

Or not. This scenario has been taught to schoolchildren for decades, but Gerta Keller and Thierry Adatte, geoscientists at Princeton University and the University of Lausanne, Switzerland, have reanalyzed old data and collected new data, and they suggest that the asteroid hit 300,000 years before the mass extinction. Theirs isn't a paradigm-shifting suggestion, because they don't reject the environmental-impact theory; rather than an asteroid, they propose, the environmental change could have been due to the eruption of massive volcanoes, with the resulting dust in the atmosphere blocking out the sun, and so on. Some scientists have replied in defense of the asteroid theory. But it is a further reminder that every scientist before yesterday was wrong, at least to some extent. Is the instant-death idea so fixed that, if it wasn't an asteroid, we need another cloudy explanation?

Ah, but everyone has in mind the Disney animated view of a herd of Brontosauruses grazing, then looking up curiously at the flaming meteor and its ensuing dust cloud obscuring the sun, then grimacing in terror (cartoon reptiles can show fear in their eyes), then staggering and plopping down dead (with a few farewell quivers from the tips of their tails, accompanied by ominous strains from the bass section).

So perhaps you can pick your favorite dust cloud. Whether any global dust cloud can cause such extinctions (while leaving countless species of all sorts, including reptiles, alive) is one of the debates that have accompanied the dust-cloud theory. But there has probably been a bit too much uncritical acceptance of the one-hit-killed-all (except those it didn't) theory.

Melodramatic global smudges provide a parable for what happened, since we certainly all know that T. rex really is gone (unless it went under water to get away from the dust and became the Loch Ness monster!), but they may not accurately reflect the actual day-to-day facts at that time deep in the mists of history.

Thursday, April 30, 2009

Tuesday, April 28, 2009

The Darwin parable?

By Ken Weiss

Every human culture is embedded in stories about itself in the world, its lore, based on some accepted type of evidence. Science is a kind of lore about the world that is accepted by modern industrialized cultures.

It has long been pointed out that science is only temporary knowledge in the sense that, as we quoted in an earlier post, every scientist before yesterday has been wrong at least to some extent. As Galileo observed, rather than being true, sometimes the accepted wisdom is the opposite of the truth--he was referring to Aristotle, whose views had been assumed to be true for nearly 2000 years.

Scientific theory provides a kind of parable of the world, a simplified story. That's not the same as the exact truth. Here is a cogent bit of dialog from JP Shanley's play Doubt, referring to a parable the priest, Father Flynn, had used in a recent sermon:

Sister James: "Aren't the things that actually happen in life more worthy of interpretation than a made-up story?"

Father Flynn: "No. What actually happens in life is beyond interpretation. The truth makes for a bad sermon. It tends to be confusing and have no clear conclusion."

Darwinian theory is like that. The idea that the traits here today are here because they were better for their carriers' ancestors than what their peers had is a tight, taut, and typically unfalsifiable kind of explanation. Since what is here is here because it worked, and what did not work is not here, this becomes true by definition. It's a catch-all 'explanation'. It at least has the appearance of truth, even when some particular Darwinian explanation invokes some specific factor (often treated, from Darwin's time to the present, as a kind of 'Newtonian' force) that drove genetic change in the adaptive direction we see today.

That's the kind of scenario that's offered to account for the evolution of flight to capture prey, showy peacock feathers to attract mates, protective coloration to hide from predators, or why people live long enough to be grandmothers (to care for their genetic descendants). Some of these explanations may very well be factually true, but almost all could have other plausible explanations or are impossible to prove.

Simple, tight, irrefutable but unprovable stories like these are, to varying but unknown extent, parables rather than literal truth. Unfortunately, while science often (and often deservedly!) has little patience with pat religious parables that are invoked as literal truth, science often too blithely accepts its own theories as literal truth rather than parable.

We naturally have our own personal ideas about the nature of life, and we think (we believe) that they are generally true. They sometimes differ, as we try to outline in our book and in these postings, from what many others take for granted as truth. Strong Darwinian selectionism and strong genetic determinism, in the ways we have discussed, are examples.

It may be difficult for people in any kind of culture, even modern technical culture, to be properly circumspect about their own truth-stories. Perhaps science must cling to theories too tight to be literally true, by dismissing the problems and inconsistencies that almost always are known. Accepted truths provide a working research framework, psychological safety in numbers, and the conformism needed to garner society's resources and power (here, among other things, in the form of grants, jobs, publications).

In fact, as P. Kyle Stanford discusses at length in his recent book Exceeding Our Grasp (Oxford University Press, 2006), most theories, including those in biology, are underdetermined: many different theories, especially unconceived alternatives to current theory, could fit the existing data comparably well.
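To make underdetermination concrete, here is a toy sketch in Python. It is our own illustration, not an example from Stanford's book, and the data points and both "theories" are hypothetical. Two different models agree exactly on every observation made so far and part ways only where nobody has looked yet.

```python
# Toy illustration of underdetermination (hypothetical data and models).
observed_x = [0, 1, 2, 3]
observed_y = [1, 3, 5, 7]

def theory_a(x):
    """A simple linear account of the observations."""
    return 2 * x + 1

def theory_b(x):
    """A rival account: the same line plus a term that is zero at every observed x."""
    return 2 * x + 1 + x * (x - 1) * (x - 2) * (x - 3)

def fits(theory):
    """True if the theory reproduces every observation exactly."""
    return all(theory(x) == y for x, y in zip(observed_x, observed_y))

print(fits(theory_a), fits(theory_b))  # True True
print(theory_a(4), theory_b(4))        # 9 33
```

Nothing in the existing data favors one model over the other; only a new observation (here, at x = 4) could tell them apart, and countless other rival terms could be added in the same way.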

We can't know when and how such new ideas will arise and take their turn in the lore that is science. But in principle, at least, a bit more humility, a bit more recognition that our simple stories are more likely to be parable than perfect, would do us good.

Good parables do have a semblance of plausibility and truth; otherwise, they would not be useful parables. As we confront the nature of genomes, we see things that seem to fit our theoretical stories. In science, as in other areas of human affairs, that fit is the lure, the drug, that draws us to extend our well-supported explanations and accept as true things that really are parable. We see this all the time in much of what is being said in genetics these days, as we have discussed.

Probably there's a parable about wisdom that could serve as a lesson in this regard. Maybe a commenter will remind us what it is!

Monday, April 27, 2009

Genetic perceptions and(/or) illusions?

By Ken Weiss

If, as we tend to think, genes are not as strongly deterministic as is often argued, then why do humans always give birth to humans, and voles to voles? Isn't the genome all-important, and therefore doesn't it have to be a blueprint (or, in more current terms, a computer program) for the organism? Even the other ingredients in the fertilized egg are often largely dismissed as unimportant, because at some stage they are determined by genes (for example, in the mother when she produces the egg cell).

It's true that most people enter this world with the same basic set of parts, even if each part varies among people. But, in many senses the person is not predictable by his or her genome. You get this disease, other people get that one. You are athletic, others are not. You can do math, others can do metalwork. And so on, but most often a genetic predisposition for these traits can't be found.

We have made a number of recent posts about the problem of connecting genotype to phenotype. You generally resemble your relatives, which must be at least partly for genetic reasons, but the idea of predicting much more than that about your specific life from your specific genome is not working out very well, except vaguely or (it is certainly true) for a number of genetic variants with strong effects, which are often rare or pathological. Among the latter are the genetic causes of diseases like muscular dystrophy and cystic fibrosis.

But these facts seem inconsistent! How can your genome determine whether you are an oak, a rabbit, or a person, and yet not determine whether you'll be a musician, get cancer, or win a Nobel prize? Is the idea of genetic control an illusion?

Yes and no. Part of the explanation has to do with what we refer to in our book as 'inheritance with memory'. Genomes acquire mutations and, if they aren't lethal, they are faithfully transmitted from parent to offspring so that some people have blue eyes and some have brown, some have freckles and others don't.

Over time, differences accumulate in genomes, and with isolation of one kind or another, lineages diverge into different species, and then differences continue to build up over evolutionary time. When millions of years later you compare a rabbit to a person (much less to a maple!), so much genetic variation has accumulated that there is clearly no confusing these different organisms. The genes, and the results of their action, are unmistakable.

What happens at each step during development of an embryo reflects the cumulative effect of many genes, and is contingent on what has already happened. Thus, step by step by step in a developing rabbit embryo the rabbit foundation gets laid and everything that happens next basically depends on getting more rabbit instructions, because, except for genes that are very similar (conserved) across species, they are the only instructions that the cells in the embryo can receive.

Variation is tolerated, but within limits: some rabbits have very floppy ears and some don't, but none have elephant ears or a rack of antlers (as in the jackalope pictured here) or can survive a mutation that gives them, say, a malfunctioning heart. So you get a continuum of rabbit types, but because of how development works, a newborn rabbit can't veer very far from 'rabbitness'.

The same is true for humans. Among people whose ancestors were separated on different continents for thousands of generations, genetic variation arose and accumulated in those populations, so it is often possible to tell from a genome where a person's ancestors lived, that is, the geographic ancestry of that person's genome.

When the subject turns to variation within a species or a population, however, the scale of variation that we are studying greatly changes. Now we are trying to identify genetic variation that, while still compatible with its species and population, and with successful embryological development, contributes to trait variation. Relative to species differences, such variation is usually very slight. But, it happens because, within limits, biology is imprecise and a certain amount of sloppiness (mutation, in this case) is compatible with life. In fact, it drives evolution.

What does this mean about the genetics of disease, or other traits that are often of interest to researchers, like behavior, artistic ability, intelligence, etc.? Culturally, we may make much of these differences, such as who can play shortstop and who can't. But often, these are traits that are not all that far from average or only are manifest after decades of life (e.g., even 'early onset' heart or Alzheimer disease means onset in one's 50s). Without even considering the effects of the environment, it is no surprise, and no illusion, that it is difficult to identify the generally small differences in gene performance that are responsible.

Of course, as in a machine, there are many ways in which a broken part can break the whole, so within any species there are many ways in which mutations with major effects on some gene can have major effects on the organism. Mostly, those are lethal or present early in life. And many mutations probably happen in the developing egg or sperm, rendering that cell unable to survive; that's prezygotic selection. But even serious diseases are small relative to the fact that a person with Huntington's disease or cancer is, first and foremost, a person.

So, there may seem to be an inconsistency between the difficulty of finding genetic causes of variation among humans, and the obvious fact that genetic variance is responsible for our development and differences from other species. A major explanation is the scale of difference one is thinking about. Just because genes clearly and definitively determine the difference between you and a maple tree--and it's easy to identify the genes that contribute to that difference--that does not mean that the genetic basis of the trait differences between you and anyone else is going to be easy to identify. Or between a red maple and a sugar maple. Or that genetic variation alone is going to explain your disease risk or particular skills.

And there's another point. In biomedical genetics we are drawing samples from billions of people, whose diseases come to the attention of specialty clinics around the developed world, and hence are reported, included in databases, and put under the genetic microscope for examination. This means that we systematically identify the very rarest, most aberrant genotypes in our species. This can greatly exaggerate the amount of genetically driven variation in humans compared to most, if not all, other species.
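A back-of-the-envelope sketch of why sampling on this scale turns up rare genotypes. The carrier frequency (one per million people) and the two sample sizes are hypothetical round numbers chosen for illustration, not estimates for any real variant, and the calculation assumes individuals are sampled independently.

```python
import math

CARRIER_FREQ = 1e-6  # hypothetical: one carrier per million individuals

def expected_carriers(sample_size, carrier_freq):
    """Expected number of carriers of a variant in a random sample."""
    return sample_size * carrier_freq

def prob_at_least_one(sample_size, carrier_freq):
    """Chance that a random sample contains at least one carrier,
    i.e. 1 - (1 - p)^n, computed via log1p/expm1 for numerical accuracy."""
    return -math.expm1(sample_size * math.log1p(-carrier_freq))

for label, n in [("world-scale clinical ascertainment", 6_000_000_000),
                 ("a study sample of 1,000", 1_000)]:
    print(f"{label}: expected carriers = {expected_carriers(n, CARRIER_FREQ):,.1f}, "
          f"P(at least one) = {prob_at_least_one(n, CARRIER_FREQ):.4f}")
```

Under these assumptions a variant that is all but invisible in a modest sample is practically guaranteed to show up, thousands of times over, when the sampling frame is an entire species.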

However, it must be said that studies of other species (such as a hundred or so standard inbred mouse strains, or perhaps a few thousand lines of fruit flies or Arabidopsis plants, as in the drawing, from which much of our knowledge of genetic variation is derived) find similar mixes of genetic simplicity and complexity. In other words, one does not need a sample space of hundreds of millions to encounter the difficulty of predicting phenotypes from genotypes.

So while this is true, it's also true that some variation in human traits is controlled by single genes, which behave at least to some extent like the classical genetic traits that Mendel studied in peas. This variation arises in exactly the same way as the variants with small effects that contribute to polygenic traits: by mutation. But the effects of mutations follow a distribution from very small to very large, and the variants at the large-effect end of that distribution are easier to identify. Some common variants of this kind do exist, because life is a mix of whatever evolution happened to produce. But complex traits remain, for understandable evolutionary reasons, complex.

(Figure: Weiss and Buchanan, 2009)

The Science Factory

An op-ed piece called "End the university as we know it" in today's New York Times, by Mark Taylor, a professor of religion at Columbia, has caught people's attention, as it should. He describes a system in which, among other problems, universities train far too many graduate students for the number of available academic jobs, because they need them to work in their labs and teach their classes. While this is certainly true, we would like to add a few more points.

Research universities have become institutions dependent on money brought in by research faculty to cover basic operating expenses, and this naturally leads to emphasis on those aspects of faculty performance. This means that promotions, raises and tenure, not to mention our natural human vanities, depend substantially if not centrally upon research rather than pedagogical prowess. In that sense, universities have become welfare systems, only the faculty are the sales force as well as the protected beneficiaries.

Things differ among fields, however. In the humanities, which even university presidents recognize have at least some value and can't be abolished entirely, publication is the coin of the realm. It is not new to say that most of what is published is rarely read by anyone (if you doubt this, check it for yourself; it's true even in the sciences!), yet this research effort usually comes at the expense of teaching students.

In the humanities, where little money is at stake and work remains mainly at the individual level, students are still largely free to pick the dissertation topic that interests them. But their jobs afterwards are generally to replace their mentors in the system.

In the sciences there are many more jobs, both academically and in industry and the public sector. We've been very lucky in that regard in genetics. But at the same time, science is more of an industrial operation, depending on serfs at the bench who are assigned to do a professor's bidding--work on his/her grant-based projects. There is much less freedom to explore new ideas or the students' own interests. They then go on to further holding areas, called post-docs, before hopefully replacing aging professors or finding a job in industry.

In both cases, however, education is taking an increasingly back seat at research universities and even good liberal arts colleges. Lecturers and graduate students teach classes, rather than professors, and there is pressure to develop money-making online courses that are rarely as good as good old person-to-person contact. The system has become decidedly lopsided.

Taylor identifies very real problems, but to us, his solutions (eliminate departments and tenure, and train fewer students, among other things) don't address the real root of the problem. As long as universities are so reliant upon overhead money from grants, which they will be for the foreseeable future, universities can't return to education as their first priority.

So long as this is based on a competitive market worldview, as it is today, the growth ethic dominates. We do whatever we need to do to get more. Exponential growth expectations were largely satisfied during the boom time, starting around 40 years ago, as universities expanded (partly because research funding did, enabling faculty to be paid on grants rather than tuition money). Science industries grew. Status for a professor was to train many graduate students. We ourselves have been great beneficiaries of this system!

Understanding the current situation falls well within the topical expertise of anthropology. One class, the professoriate, used the system to expand its power and access to resources. Whether by design or not, a class of subordinates developed. A pyramid of research hierarchy grew, with concentration of resources in larger and wealthier groups, labs, or projects. The peer review system designed to prevent self-perpetuating old-boy networks has been to a considerable extent coopted by the new old boys.

As everyone knows, or should know, exponential growth is not sustainable. If each of us trains many students, and then they do the same, etc., we eventually saturate the system, and that is at least temporarily what is happening now.
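The arithmetic behind this can be sketched in a few lines of Python. The figure of ten students per professor is a hypothetical round number, and the model deliberately ignores attrition, retirements, and jobs outside academia; it only shows how quickly "each of us trains many students" compounds.

```python
def trainees_by_generation(students_per_mentor, generations, founders=1):
    """Number of new PhDs in each academic 'generation' if every graduate
    goes on to train the same number of students as their mentor did."""
    counts = [founders]
    for _ in range(generations):
        counts.append(counts[-1] * students_per_mentor)
    return counts

# Hypothetical: each professor trains 10 students over a career.
print(trainees_by_generation(10, 4))  # [1, 10, 100, 1000, 10000]
# Replacement level: one successor per mentor keeps the system the same size.
print(trainees_by_generation(1, 4))   # [1, 1, 1, 1, 1]
```

Only the replacement-level line stays flat; any number above one per mentor grows geometrically and must eventually outrun a fixed or slowly growing number of positions.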

What can or should be done about it is not clear. The idea that we'll somehow intentionally change to what Taylor wants seems very unanthropological: as a rule, we simply don't change in that way. Cultural systems, of which academia is one, change by evolution in ways that are usually not predictable. At present, there are too many vested interests (such as ourselves, protecting our jobs and privileges). Something will change, but it will be most humane if it's gradual rather than chaotic. Maybe, whether in the ways Taylor suggests or in others, a transition can be undertaken that does not require the system to collapse first.

We'll all just have to stay tuned....

Research universities have become institutions dependent on money brought in by research faculty to cover basic operating expenses, and this naturally leads to emphasis on those aspects of faculty performance. This means that promotions, raises and tenure, not to mention our natural human vanities, depend substantially if not centrally upon research rather than pedagogical prowess. In that sense, universities have become welfare systems, only the faculty are the sales force as well as the protected beneficiaries.

Things differ among fields, however. In the humanities, that even university presidents recognize have at least some value and can't totally be abolished, publication is the coin of the realm. It is not new to say that most of what is published is rarely read by anyone (if you doubt this, check it for yourself--it's true even in the sciences!), yet this research-effort usually comes at the expense of teaching students.

In the humanities, where little money is at stake and work remains mainly at the individual level, students are still largely free to pick the dissertation topic that interests them. But their jobs afterwards are generally to replace their mentors in the system.

In the sciences there are many more jobs, both academically and in industry and the public sector. We've been very lucky in that regard in genetics. But at the same time, science is more of an industrial operation, depending on serfs at the bench who are assigned to do a professor's bidding--work on his/her grant-based projects. There is much less freedom to explore new ideas or the students' own interests. They then go on to further holding areas, called post-docs, before hopefully replacing aging professors or finding a job in industry.

In both cases, however, education is taking an increasing back seat at research universities and even good liberal arts colleges. Lecturers and graduate students teach classes, rather than professors, and there is pressure to develop money-making online courses that are rarely as good as good-old person to person contact. The system has become decidedly lopsided.

Taylor identifies very real problems, but to us, his solutions (eliminate departments and tenure, and train fewer students, among other things) don't address the real root of the problem. As long as universities are so reliant upon overhead money from grants, which they will be for the foreseeable future, universities can't return to education as their first priority.

So long as this is based on a competitive market worldview, as it is today, the growth ethic dominates: one does whatever one needs to do to get more. Exponential growth expectations were largely satisfied during the boom time that began around 40 years ago, as universities expanded (partly because research funding did, enabling faculty to be paid from grants rather than tuition money). Science industries grew. Status for a professor meant training many graduate students. We ourselves have been great beneficiaries of this system!

Understanding the current situation falls well within the topical expertise of anthropology. One class, the professoriate, used the system to expand its power and access to resources. Whether by design or not, a class of subordinates developed. A pyramid of research hierarchy grew, with resources concentrated in larger and wealthier groups, labs, or projects. The peer review system, designed to prevent self-perpetuating old-boy networks, has to a considerable extent been co-opted by the new old boys.

As everyone knows, or should know, exponential growth is not sustainable. If each of us trains many students, and then they do the same, etc., we eventually saturate the system, and that is at least temporarily what is happening now.
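The saturation argument is simple arithmetic, and a few lines make it concrete. This is only a sketch under loudly hypothetical assumptions not taken from the post: every professor trains the same number of students (`students_per_mentor`), every trainee does the same, there is no attrition, and the number of faculty posts stays fixed, so each retirement frees exactly one position.

```python
# Hypothetical model: constant students-per-mentor, no attrition,
# and a fixed number of faculty positions (one opening per retiring mentor).


def cohort_size(generations: int, students_per_mentor: int, start: int = 1) -> int:
    """Size of the newest academic 'generation' descended from `start` mentors."""
    return start * students_per_mentor ** generations


def replacement_fraction(students_per_mentor: int) -> float:
    """Share of trainees who can take over a mentor's post when posts are fixed."""
    return 1 / students_per_mentor


if __name__ == "__main__":
    k = 5  # hypothetical: students trained per professor over a career
    for g in range(4):
        print(f"generation {g}: {cohort_size(g, k)} trainees")
    print(f"fraction who can replace a mentor: {replacement_fraction(k):.0%}")
```

With the made-up value of 5 students per mentor, one professor's academic descendants number 125 after three generations, while only 20% of any cohort can step into a vacated post; the growth is geometric but the openings are not.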

What can or should be done about it is not clear. The idea that we'll somehow intentionally change to what Taylor wants seems very unanthropological: as a rule, we simply don't change in that way. Cultural systems, of which academia is one, change by evolution, in ways that are usually not predictable. At present, there are too many vested interests (such as ourselves, protecting our jobs and privileges). Something will change, but it will be most humane if the change is gradual rather than chaotic. Maybe, whether in the ways Taylor suggests or in others, a transition can be undertaken that does not require the system to collapse first.

We'll all just have to stay tuned....

It would never work out

More from our Humor Editor

http://news.yahoo.com/comics/speedbump;_ylt=ArrJ4pWQkHmu.kjkHYe7I_MK_b4F

Friday, April 24, 2009

Prove me wrong

Sun Apr 19, 12:00 AM ET

http://d.yimg.com/a/p/uc/20090419/lft090419.gif

Thank you, Jennifer, once again!

Thursday, April 23, 2009

Who doesn't 'believe' in genes? Who does believe in miracles?

By Ken Weiss

Disputes in science, as in any other field, can become polarizing and accusatory. Skepticism is almost by definition the term applied, usually in a denigrating way, to a minority view. Minority views are often if not usually wrong, but history shows that majority views can be similarly flawed (as we mentioned in our post of April 6). Indeed, major scientific progress occurs specifically when the majority view is shown to be incorrect in important ways.

It is sometimes said, or implied, about those who doubt some of the statements made about mapping complex traits by GWAS (see previous posts) and other methods, that the skeptics 'don't even believe in genes!' The word 'believe' naturally comes to the tongue when characterization of heretics is afoot, and reflects an important aspect of majority views (including the current basic theory in any science): they are belief systems.

In genetics, we know of nobody who doesn't 'believe' in genes. The question is not one of devil-worship by witches like those Macbeth met on a Scottish heath. The question is about what, where, and how genes work and how that is manifest in traits we in the life sciences try to understand. Not to believe in genes would be something akin to not believing in molecules, or heat.

In the case of human genetics, genes associated with and/or responsible for hundreds of traits, including countless diseases, have been identified (you can easily read about them in OMIM and elsewhere). Many of these are clearly understandable as the effects, sometimes direct effects, of variation in specific genes.

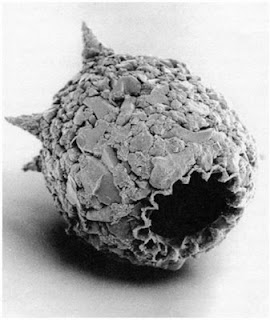

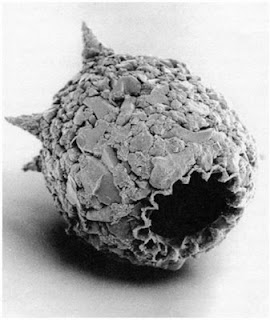

In some examples, like cystic fibrosis, almost every case of a trait or disease is due to variation in the same gene. In others, such as hereditary deafness, different people or families are affected because of variants in different genes, but the causal variants appear to be so rare in the population that in each family deafness is due to only one of them. This is called multiple unilocus causation, and many different genes have been found for such traits (as in the deafness case shown).

Even for more complex traits like, say, diabetes or various forms of cancer, variation at some genes has a strong enough effect that standard methods of gene-hunting were able to find them (and, yes, GWAS can find them, too!). BRCA1 and its association with risk of breast cancer is a classic instance. But often the results can't be replicated in other studies or populations, or even in different families, a problem that has been much discussed in the human genetics literature, including in papers written by us.

So, what is at issue these days is not whether genes exist, are important, or are worthy of study (and the same applies to human disease, or studies of yeast, bacteria, insects, flowering plants, or whatever you're interested in). Instead, what is at issue is how genes work in the context of complex traits that involve many interacting genetic and environmental factors.

In addition to the basic understanding of how genes work in this context (and, incidentally, the rapidly expanding sense of DNA functions beyond the usual concept of what a 'gene' is), there is the question of how to find the genes and their effects, and what kind of information that may provide for applications in agriculture or human health.

In the latter case especially, the promise has been that we can predict your health from your DNA. Widely publicized companies are selling this idea in various ways, based on DNA samples submitted by customers, and some medical geneticists are promising personalized medicine in glowing terms, too.

Indeed, there has long been an effective profession dedicated to this general problem. It's called genetic counseling. Genetic counselors work in a monitored setting, with standardized approaches and ethical procedures in dealing with clients. They systematically collect appropriate clinical and other information. And for known genetic variation associated with disease they, or knowledgeable physicians, can make useful, personalized predictions, explaining options for treatment, family planning, and so on.

It is not a lack of 'belief' in genes, but the opposite--an understanding of genetics--that leads some scientists to question the likely efficacy of this or that proposed direction in health-related research, or in other areas such as criteria for developing evolutionary explanations (i.e., scenarios for past natural selection) for various traits including complex traits like diabetes and even social behavior.

Dispute in science should not be viewed or characterized as if it were the same as dispute in religion, even though both are similar cultural phenomena that often center on accepted theory or dogma. Questions about priorities, and about the dramatic promises made for scientific approaches, are legitimate, and all dogma should be questioned.

Every biologist we know 'believes' in genes. But not all biologists believe in miracles!

Tuesday, April 21, 2009

GWAS revisited: vanishing returns at expanding costs?

We've now had a chance to read the 4 papers on genomewide association studies (GWAS) in the New England Journal of Medicine last week, and we'd like to make a few additional comments. Basically, we think the impression left by the New York Times science commentary, that GWAS are being seriously questioned by heretofore strong adherents, was misleading. Yes, the authors do suggest that not all the answers are in yet, but they are still believers in the genetic approach to common, complex disease.

David Goldstein (whose paper can be found here) makes the point that SNPs (single nucleotide polymorphisms, or genetic variants) with major disease effects have probably been found by now, and it's true that they don't explain much of the heritability (evidence of overall inherited risk) of most diseases or traits. He believes that further discoveries using GWAS will generally be of very minor effects. He concludes that GWAS have been very successful in detecting the most common variants, but now have reached the point of diminishing returns. He says that "rarer variants will explain missing heritability", and these can't be identified by GWAS, so human genetics now needs to turn to sequencing whole genomes to find these.

Joel Hirschhorn (you can find his paper here) states that the main goal of GWAS has never been disease prediction, which indeed they've only had modest success with, but rather the discovery of biologic pathways underlying polygenic disease or traits. GWAS have been very successful at this--that is, they've confirmed that drugs already in use are, as was basically also known, targeting pathways that are indeed related to the relevant disease, although he says that further discoveries are underway. Unlike Goldstein, he believes that larger GWAS will find significant rare variants associated with disease.

Peter Kraft and David Hunter (here) tout the "wave of discoveries" that have resulted from GWAS. They do say that by and large these discoveries have low discriminatory ability and predictive power, but believe that further studies of the same type (only much bigger) will find additional risk loci that will help explain polygenic disease and yield good estimates of risk. They suggest that, because of findings from GWAS, physicians will be able to predict individual risk for their patients in 2 to 3 years.

John Hardy and Andrew Singleton (here) describe the GWAS method and point out that people are surprised to learn that it's often just chromosome regions that this method finds, not specific genes, and that some of these are probably not protein-coding regions but rather have to do with regulating gene expression. Notably, unlike the other authors, who all state that the "skeptics were wrong" but somehow don't bother to cite the skeptics' work so that the reader could check that claim, they do cite the Terwilliger and Hiekkalinna paper we mentioned here last week, though that paper is on a specialized technical issue, not the basic issues related to health effects.

They also state that the idea of gene-by-environment interaction is a cliché and has never been demonstrated. Whether they mean by this that there is no environmental effect on risk, or simply that it's difficult to quantify (or, a technical point, that environmental effects are additive), is not clear. If the former, that's patently false--even the risk of breast cancer in women who do carry the known and undoubted BRCA1 or BRCA2 risk alleles varies significantly by decade of birth. Or consider the huge rise in diabetes, obesity, asthma, autism, ADHD, various cancers, and many other diseases just within the memory of at least some living scientists who care to pay attention. And see our post of 4/18.

So we find none of the supposed general skepticism here. Yes, these papers do acknowledge that the risk explained by GWAS has been low, but they claim this as 'victory' for the method; they dismiss, minimize, or (worse) misrepresent problems that were raised long ago, and say instead either that risk will be explained by bigger studies or that GWAS weren't meant to explain risk in the first place. (It's not clear that the non-skeptics agree with each other about the aim of GWAS, or about whether GWAS have now served their purpose and it's time to move on to methods that, apparently, actually do, or also do, explain heritability and predict risk.)

The 'skeptics' never said that GWAS would find nothing. What at least some of us said was that what would be found would be some risk factors, but that complex traits could not by and large be dissected into the set of their genetic causes in this way.

Rather than face these realities, we feel that what is being done now is to turn defeat into victory by claiming that ever-larger efforts will finally solve the problem. We think that is mythical. Unstable and hardly-estimable small, probabilistic relative risks will not lead to revolutionary 'personalized medicine', and there are other and better ways to find pathways. If a pathway (network of interacting genes) is so important, it should have at least some alleles that are common and major enough that they should already be known (or could easily be known from, say, mouse experiments); once one member is known, experimental methods exist to find its interacting gene partners.
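Why small relative risks make poor individual predictors can be shown with a short calculation. This is a minimal sketch with purely hypothetical inputs (a disease with 10% overall risk, a risk allele carried by 30% of people, and a relative risk of 1.2); none of these numbers come from the NEJM papers or any particular GWAS, and the function names are illustrative only.

```python
# Hypothetical inputs only; not estimates from any published GWAS.


def carrier_risks(population_risk: float, relative_risk: float, carrier_freq: float):
    """Absolute risk in non-carriers (r0) and carriers (r1) of a risk allele,
    chosen so the population average matches `population_risk`:

        population_risk = carrier_freq * RR * r0 + (1 - carrier_freq) * r0
    """
    r0 = population_risk / (1 + carrier_freq * (relative_risk - 1))
    return r0, r0 * relative_risk


def share_of_cases_in_carriers(population_risk, relative_risk, carrier_freq):
    """Fraction of all cases that occur among carriers."""
    _, r1 = carrier_risks(population_risk, relative_risk, carrier_freq)
    return carrier_freq * r1 / population_risk


if __name__ == "__main__":
    r0, r1 = carrier_risks(0.10, 1.2, 0.30)
    print(f"non-carriers: {r0:.1%}, carriers: {r1:.1%}")
    share = share_of_cases_in_carriers(0.10, 1.2, 0.30)
    print(f"share of cases in carriers: {share:.0%}")
```

Under these made-up values, carriers face about an 11.3% risk against about 9.4% for non-carriers, and carriers account for about 34% of cases while being 30% of the population. Learning your genotype barely moves your personal forecast, which is the sense in which such findings have low discriminatory power.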

In a way it's also a sad consequence of ethnocentric thinking to suppose that, because we can't find major risk alleles in mainstream samples from industrialized populations, such undiscovered alleles might not exist in, or even be largely confined to, smaller or more isolated populations, where they could be quite important to public health. They do exist, and, ironically, mapping methods (a technical point: especially linkage mapping) can be a good way to find them.

But if we're in an era of diminishing, if not vanishing, returns, we're also in an era in which, we think, we will not only get less but will have to spend, and hence sequester, far more research resources to get it. So there are societal as well as scientific issues at stake.

In any case, we already have strong, pathway-based personalized medicine! By far the major risk factor for most diabetes, for example, involves energy and fat metabolic pathways. Individuals at risk can already target those pathways in simple, direct ways: walk rather than taking the elevator, and don't over-eat!

If those pathways were addressed in this way, there would actually be major public impact, and ironically, what would remain would be a residuum of cases that really are genetic in the meaningful sense, and they would be more isolated and easier to study in appropriate genetic, genomic, and experimental ways.

Monday, April 20, 2009

Doubt

"Have you ever held a position in an argument past the point of comfort....given service to a creed you no longer utterly believed?" So asks John Patrick Shanley in the preface to his 2004 play Doubt. It's a very good play (and an equally good new movie of the same name) about nuances and our tendency to fail to acknowledge how little we actually know. Did the priest...or didn't he?

Our society is currently commodified, dumbed-down, and rewards self-assurance, assertion, and extreme advocacy--belief. It's reflected in the cable 'news' shouting contests, and the fast-paced media orientation that pervades many areas of our society. There are penalties for circumspection.

Science is part of society and shares its motifs. Scientists are driven by natural human vanities, of course, but also by the fact that as middle-class workers we need salaries, pension funds, and health care coverage. We are not totally disinterested parties, standing by and abstractly watching knowledge of the world accumulate. Academic work is viewed as job-training for students, and research is largely filtered through the lens of practical 'importance'. Understanding Nature for its own sake is less valued than being able to manipulate Nature for gain. This was less prominent in times past (in Darwin's time, for example: although that was the height of industrialization and empire, when practical science and engineering were certainly greatly respected, he could afford the luxury of pure science).

It is difficult to have a measured discussion about scientific issues--stem cells are a prime example, but equally difficult are discussions about the nature of genomes and what they do, and the nature of life and evolution. Our societal modus vivendi imposes subtle, unstated pressure to take a stand and build a fortress around it. Speakers get invited to give talks based on what people think they're going to say (often, speaking to the already-converted). The hardest sentence to utter is "I don't know." (Another, "I was wrong," is impossible, so it isn't even on the radar).

A theme of our recent postings has been what we actually know in science, and what we don't. Scientists always cling firmly to their theoretical worldview--their paradigm, to use Thomas Kuhn's word for it. Maybe that's built into the system. In many ways it is quite important because, as we've recently said, it's hard to design experiments and research if you don't have a framework to build them on.

But frameworks can also be cages. Dogma and rigid postures may not be good for an actual understanding of Nature, and are costly in terms of wasted research resources. Clinging to an idea leads to clinging to existing projects (often, trying to make them larger and last longer), even when they can, from a disinterested point of view, be seen to be past their sell-by date. Great practical pressures favor that rather than simply saying enough is enough, it's not going to go very far, so let's start investing in something new that may have, or may lead us to insights that have, better prospects. Let's ask different questions, perhaps.

GM failed to respond to such a situation, persisting in making gas-guzzling SUVs, and oil companies now try to fight off alternative energy sources. So science is not alone. But it's a concern nonetheless.

Science would be better with less self-assurance and less reward for promotional skills, and if experiments were designed more to learn about Nature than to prove pet ideas or to provide findings that can be sold in future grant or patent applications. Most null hypotheses being 'tested' are surrogates for set-up studies in which at least some of the answer is basically known. For example, when searching for genes causing some disease, our null hypothesis is that there is no such gene, yet before the study we almost always have at least some evidence that there is one (such as resemblance among family members). So our degree of surprise when we reject the null should be much less than is usually stated. The doubt we express is in that sense less than fully sincere, and it leads to favorable interpretations of our findings that we use to perpetuate the same kind of thinking we began with.
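One way to put a number on "degree of surprise" is to ask how likely a significant result was before the study began. The sketch below uses a Bayesian framing that is our illustrative choice, not a method from the post: `prior_alt` is a hypothetical prior probability that the null (no such gene) is false, and the 80% power and 5% significance level are conventional placeholder values.

```python
import math

# Illustrative Bayesian framing; prior, power, and alpha are hypothetical.


def prob_significant(prior_alt: float, power: float = 0.8, alpha: float = 0.05) -> float:
    """Pre-study probability of a 'significant' result, given a prior
    probability `prior_alt` that the null hypothesis is false."""
    return prior_alt * power + (1 - prior_alt) * alpha


def surprise_bits(prior_alt: float, power: float = 0.8, alpha: float = 0.05) -> float:
    """Surprisal (in bits) of actually observing a significant result."""
    return -math.log2(prob_significant(prior_alt, power, alpha))


def posterior_alt(prior_alt: float, power: float = 0.8, alpha: float = 0.05) -> float:
    """Bayes' rule: probability the null is false after a significant result."""
    return prior_alt * power / prob_significant(prior_alt, power, alpha)


if __name__ == "__main__":
    for prior in (0.9, 0.01):  # strong prior evidence (e.g. familial clustering) vs. none
        print(f"prior {prior}: surprise {surprise_bits(prior):.2f} bits, "
              f"posterior {posterior_alt(prior):.3f}")
```

With strong prior evidence (0.9), rejecting the null carries only about 0.46 bits of surprise and barely moves belief; with a genuinely open question (0.01), the same result carries about 4.1 bits. Studies designed around an already-supported hypothesis are, in this sense, confirming rather than discovering.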

Real doubt, the challenge of beliefs, has a fundamental place in science. Let's nurture it.

Our society is currently commodified, dumbed-down, and rewards self-assurance, assertion, and extreme advocacy--belief. It's reflected in the cable 'news' shouting contests, and the fast-paced media orientation that pervades many areas of our society. There are penalties for circumspection.

Science is part of society and shares its motifs. Scientists are driven by natural human vanities, of course, but also by the fact that as middle-class workers we need salaries, pension funds, and health care coverage. We are not totally disinterested parties, standing by and abstractly watching knowledge of the world accumulate. Academic work is viewed as job-training for students, and research is largely filtered through the lens of practical 'importance'. Understanding Nature for its own sake is less valued than being able to manipulate Nature for gain. This was less prominent in times past (Darwin's time, for example; though at the height of industrialization and empire, when there was certainly great respect for practical science and engineering, he could afford the luxury of pure science).

It is difficult to have a measured discussion about scientific issues--stem cells are a prime example, but equally difficult are discussions about the nature of genomes and what they do, and the nature of life and evolution. Our societal modus vivendi imposes subtle, unstated pressure to take a stand and build a fortress around it. Speakers get invited to give talks based on what people think they're going to say (often, speaking to the already-converted). The hardest sentence to utter is "I don't know." (Another, "I was wrong," is impossible, so it isn't even on the radar).

A theme of our recent postings has been what we actually know in science, and what we don't. Scientists have always clung firmly to their theoretical worldview--their paradigm, to use Thomas Kuhn's word for it. Maybe that's built into the system. In many ways it is quite important because, as we've recently said, it's hard to design experiments and research if you don't have a framework to build them on.

But frameworks can also be cages. Dogma and rigid postures may not be good for an actual understanding of Nature, and they are costly in wasted research resources. Clinging to an idea leads to clinging to existing projects (often trying to make them larger and last longer), even when, from a disinterested point of view, they can be seen to be past their sell-by date. Great practical pressures favor that course over simply saying: enough is enough, this isn't going to go very far, so let's start investing in something new that may have better prospects, or may lead us to insights that do. Let's ask different questions, perhaps.

GM failed to respond to such a situation, persisting in making gas-guzzling SUVs, and oil companies now try to fight off alternative energy sources. So science is not alone. But it's a concern, nonetheless.

Saturday, April 18, 2009

The rear-view mirror and the road ahead

By Ken Weiss

We've already posted some critiques of the current push for ever-larger genomewide association studies (GWAS) of disease, which have been promoted with glowing promises that huge-scale studies and technology will revolutionize medicine and cure all the known ills of humankind (a slight exaggeration on our part, but not that far off the spin!). We want to explain our reasoning a bit more.

For many understandable reasons, geneticists would love to lock up huge amounts of research grant resources, for huge amounts of time, to generate huge amounts of data that will be deliciously interesting to play with. But such vast up-front cost commitments may not be the best way to eliminate the ills of humankind. It may not even be the best way to understand the genetic involvement in those ills.

In a recent post we cited a number of our own papers in which we've been pointing out problems in this area for many years, and while we didn't give references, we did note that a few others have recently been saying something like this, too. The problem is that searching for genetic differences that may cause disease relies on designs such as comparing cases and controls, which don't work very well for common, complex diseases like diabetes or cancers. One reason among several is that if a risk variant is common, people without the disease (the controls) may still carry it, yet remain disease-free because, say, they haven't been exposed to whatever provocative environment is also associated with risk (diet, lack of exercise, etc.). And these designs don't work very well for explaining normal variation.
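A toy calculation can make the case-control problem concrete. The sketch below assumes a hypothetical model (ours, not taken from any study) in which a common variant raises risk only in people who are also exposed to some environmental factor, with genotype and exposure independent of each other. It computes expected frequencies directly rather than simulating individuals, and every number in it is invented for illustration.

```python
def case_control_summary(carrier_freq, exposure_freq, baseline_risk, joint_risk):
    """Expected carrier frequencies among cases and controls, plus the marginal odds ratio.

    Hypothetical model: genotype and exposure are independent, and risk is
    raised to joint_risk only when a carrier is also exposed; everyone else
    has baseline_risk.
    """
    cells = []  # (is_carrier, population fraction, disease risk)
    for carrier in (True, False):
        p_g = carrier_freq if carrier else 1 - carrier_freq
        for exposed in (True, False):
            p_e = exposure_freq if exposed else 1 - exposure_freq
            risk = joint_risk if (carrier and exposed) else baseline_risk
            cells.append((carrier, p_g * p_e, risk))

    case_carrier = sum(p * r for c, p, r in cells if c)
    case_non = sum(p * r for c, p, r in cells if not c)
    ctrl_carrier = sum(p * (1 - r) for c, p, r in cells if c)
    ctrl_non = sum(p * (1 - r) for c, p, r in cells if not c)

    return {
        "carriers_among_cases": case_carrier / (case_carrier + case_non),
        "carriers_among_controls": ctrl_carrier / (ctrl_carrier + ctrl_non),
        "odds_ratio": (case_carrier / case_non) / (ctrl_carrier / ctrl_non),
    }


# Same variant, same biology; only the prevalence of the exposure differs.
for exposure in (0.05, 0.3, 0.6):
    s = case_control_summary(0.5, exposure, baseline_risk=0.01, joint_risk=0.10)
    print(f"exposure {exposure:.2f}: carriers in cases {s['carriers_among_cases']:.2f}, "
          f"in controls {s['carriers_among_controls']:.2f}, OR {s['odds_ratio']:.2f}")
```

Under these made-up numbers, roughly half of the controls carry the 'risk' variant in every scenario, and the odds ratio a study would report rises or falls with how common the exposure happens to be, even though the variant itself does exactly the same thing.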

As we have said, the knowledge of why we find as little as we are finding has been around for nearly a century, and it connects us to what we know about evolutionary genetics. Since the facts apply as well to almost any species--even plants, inbred laboratory mice, and single-celled species like yeast--they must be telling us something about life that we need to listen to!

Part of the problem is that environments interact with many different genes to produce phenotypes (traits, including disease) in ways we would very much like to understand. However, our methods of understanding causation necessarily look backward in time (they are 'retrospective'): we study people who have some trait, like diabetes, and compare them with controls matched for age, sex, and so on, to see how they differ. Geneticists and environmental epidemiologists stress their own particular kinds of risk, but the recent trend has been strongly toward genes, partly because environmental risk factors have proven devilishly hard to figure out, and partly because genetics has more glamour (and plush funding) these days: being molecular, it may have the sexy appearance of real science!

Like looking in the rear-view mirror, we see the road of risk-factor exposures that we have already traveled. But what we really want to understand is the causal process itself, and for 'personalized medicine' and even public health we need to look forward in time, to current people's futures. That is what we are promising to predict, so we can avoid all ills (and produce perfect children).

We need to look at the road ahead, and what we see in the rear-view mirror may not be all that helpful. We know that the environmental component of most common diseases contributes far more to risk than any specific genetic factor, and probably far more than all genetic factors combined do on their own. We know this because many, if not most, common diseases have changed in prevalence, often dramatically, in just the last couple of generations, a period in which we've had very good data and an army of investigators tracking exposures, lifestyles, and outcomes. Gene frequencies cannot change nearly that much in so few generations, so changes of that size must be driven largely by the environment.
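The key step here is that gene frequencies barely move in two or three generations. A minimal sketch, assuming simple genic (multiplicative) selection with a hypothetical and deliberately generous selection coefficient, shows how little an allele frequency changes over that span.

```python
def allele_trajectory(p0, s, generations):
    """Allele frequency over time under simple genic selection.

    Each generation the favored allele's frequency is updated as
    p' = p * (1 + s) / (1 + s * p), where 1 + s * p is the mean fitness.
    p0 and s are hypothetical illustration values, not estimates.
    """
    freqs = [p0]
    p = p0
    for _ in range(generations):
        p = p * (1 + s) / (1 + s * p)
        freqs.append(p)
    return freqs


# Three generations (roughly 75 years) with a 5% fitness advantage.
for gen, p in enumerate(allele_trajectory(p0=0.30, s=0.05, generations=3)):
    print(f"generation {gen}: allele frequency {p:.3f}")
```

Even with what would be quite strong selection, the frequency creeps from 0.30 to only about 0.33, nowhere near enough to account for the dramatic shifts in prevalence described above.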

Those changes in prevalence are a warning shot across the bow of genetics, to which geneticists have had a very convenient tin ear. They rationalize these clear facts by asserting that the changes in common diseases are due to interactions between susceptible genotypes and the environmental changes. Even if such unsupported assertions were true, what we see in the rear-view mirror does not tell us what the road ahead will be like, for the very simple but important reason that there is absolutely no way to know what the environmental--the non-genetic--risk factor exposures will be.

No amount of Biobanking will change this, or make genotype-based risk prediction accurate (except for the small subset of diseases that really are genetic), because each future is a new road and risks are inevitably assessed retrospectively. That would be true even if causation were relatively simple and clear, which is manifestly not the case, and no matter how accurately we could identify the genotypes of everyone involved (there are some problems there, too, which we will have to discuss another time).
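The rear-view-mirror problem can be sketched with a toy model of a hypothetical variant that raises risk only in exposed people. A carrier's predicted risk is then an average over exposures, so it depends on an exposure frequency that, for the future, we cannot know. All numbers are invented for illustration.

```python
def genotype_risk(exposure_freq, baseline_risk, exposed_carrier_risk):
    """Absolute disease risk for carriers of a hypothetical variant.

    Assumes carriers have baseline_risk when unexposed and exposed_carrier_risk
    when exposed; non-carriers are assumed to stay at baseline_risk.
    """
    return exposure_freq * exposed_carrier_risk + (1 - exposure_freq) * baseline_risk


BASELINE = 0.01          # hypothetical risk for unexposed people and non-carriers
EXPOSED_CARRIER = 0.10   # hypothetical risk for exposed carriers

# Past exposure frequency (what the rear-view mirror shows) versus two possible futures.
for label, exposure in (("past", 0.2), ("future A", 0.05), ("future B", 0.5)):
    risk = genotype_risk(exposure, BASELINE, EXPOSED_CARRIER)
    print(f"{label:>8}: carrier risk {risk:.3f}, relative risk {risk / BASELINE:.1f}")
```

The variant and its biology are identical in all three rows; only the environment differs. A 'genetic' risk estimated from past data is right only if the future happens to look like the past.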

This is a deep problem in the nature of knowledge about questions such as this. It is one sober, not far-out, and not anti-scientific reason why scientists and public funders should be very circumspect before committing major amounts of funding, for decades into the future, to tracking everyone and everyone's DNA sequences. And here we are not even considering the great potential for intrusiveness that such data would create.

As geneticists, we would be highly interested in poking around in the data that mega-studies would yield. But we don't think generating such data would be societally responsible, given the other needs and priorities (some of which actually are genetic) that we know we can address with available resources, through other problems or approaches.

We can learn things by checking the rear-view mirror, but life depends on keeping our eye on the road ahead.

Friday, April 17, 2009

Darwin and Malthus, evolution in good times and bad

Yesterday, again in the NY Times, Nicholas Kristof reported on studies of the genetic basis of IQ. This has long been a sensitive subject because, of course, the measurers come from the upper social strata, and they design the tests to measure things they feel are important (e.g., in defining what IQ even is). It's a middle-class way of ranking middle-class people who are competing for middle-class resources. Naturally, the lower social strata do worse. Whether or not that class-based aspect is societally OK is a separate question and a matter of one's social politics: Kristof says no, and so do we, but clearly not everyone shares that view, and there are still those who want to attribute more mental agility to some races than to others.